In the rapidly advancing field of computer vision, the quality and precision of your dataset can determine the success or failure of your project. Whether you’re working on surveillance, sports analysis, or cinematic productions, a clean, well-curated dataset is crucial: it is the foundation for effective algorithms, models, and systems. No matter how advanced the model or algorithm, poor-quality input leads to poor-quality output. Garbage in, garbage out.

In our previous posts, we discussed how to visualize videos and search for objects within them. Now, we are taking a significant leap forward with the ability to search for events within videos. This innovation opens up new possibilities for more sophisticated and accurate video analysis.

What is an Event in Video Analysis?

An event in video analysis refers to a sequence of frames where an object moves or changes within the field of view. For example, an event could be a person crossing the street or a car overtaking from the right or left.

Unlike object detection in a single frame, event detection involves temporal processing: analyzing a sequence of frames over time to capture how objects move and act. Events therefore carry more context and semantic meaning than isolated objects in a single frame. Searching for events lets us ask more complex questions about video content, for example “find every clip in which a person crosses the street” rather than “find every frame that contains a person,” which in turn enables more advanced and precise applications.

Event Search: A New Dimension of Video Analysis

Akridata’s Data Explorer is an AI-driven platform designed to streamline visual data curation, reducing development costs by minimizing annotation expenses and eliminating unnecessary training cycles. The latest release of Data Explorer introduces Event Search, an interactive solution that allows users to search for specific events within video files.

This new feature is particularly valuable for fields such as surveillance, sports analysis, and film editing, where understanding and identifying events is crucial.

How to Perform Event Search in Videos with Data Explorer

The Event Search feature in Data Explorer involves a few key steps to accurately identify and retrieve relevant video sequences:

1. Define the Event Length & Stride

An event typically has a characteristic duration. For instance, crossing the road might take around 10 seconds. Once you set this duration, Data Explorer splits the video into consecutive sequences of that length. For example, a 10-second event length produces the following sequences (in seconds): 0–10, 10–20, 20–30, and so on.
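To make the splitting concrete, here is a minimal Python sketch that turns a video duration and an event length into back-to-back frame ranges. The function name `split_into_sequences`, the frame-rate parameter, and the choice to drop a trailing remainder shorter than one full sequence are assumptions for illustration; they are not taken from Data Explorer.

```python
def split_into_sequences(duration_s: float, seq_len_s: float, fps: float) -> list[tuple[int, int]]:
    """Split a video into consecutive, non-overlapping sequences.

    Returns half-open frame ranges [start, end). A trailing remainder shorter
    than one full sequence is dropped (an assumption of this sketch).
    """
    if seq_len_s <= 0 or fps <= 0:
        raise ValueError("seq_len_s and fps must be positive")
    total_frames = int(duration_s * fps)
    frames_per_seq = round(seq_len_s * fps)
    if frames_per_seq < 1:
        raise ValueError("sequence is shorter than one frame")
    return [
        (start, start + frames_per_seq)
        for start in range(0, total_frames - frames_per_seq + 1, frames_per_seq)
    ]


# A 35-second clip at 30 fps, split into 10-second sequences.
for start, end in split_into_sequences(35, 10, 30):
    print(f"frames {start}-{end - 1} ({start / 30:.0f}s to {end / 30:.0f}s)")
```

For the 35-second clip this yields the 0–10, 10–20, and 20–30 second sequences; the last 5 seconds do not fill a complete sequence and are dropped. Padding the video or keeping a shorter final sequence would be equally reasonable choices.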

However, events rarely align perfectly with these sequences. For example, an event that starts at second 4 and ends at second 14 would be split between the 0–10 and 10–20 sequences. To address this, Data Explorer allows sequences to overlap by setting a stride: the offset between the start times of consecutive sequences. A 3-second stride creates overlapping sequences like these: 0–10, 3–13, 6–16, 9–19, 12–22, and so on. This overlap increases the likelihood of capturing the entire event within a single sequence.
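The overlapping scheme can be sketched the same way; the helper names below are hypothetical and times are in seconds. The sketch also makes one property of the overlap explicit. Because window starts are `stride` apart, every event has a window that starts less than `stride` seconds before it, so the event fits inside that window whenever its duration is at most `length - stride` (provided that window still fits inside the video). With a 10-second length and a 3-second stride, that covers events up to 7 seconds long, while a full 10-second event such as 4–14 still fits in none of the windows.

```python
def sliding_windows(duration: float, length: float, stride: float) -> list[tuple[float, float]]:
    """Windows [start, start + length] whose start times are `stride` apart.

    Only windows that fit entirely inside the video are kept.
    """
    if length <= 0 or stride <= 0:
        raise ValueError("length and stride must be positive")
    windows = []
    k = 0
    # Compute each start as k * stride to avoid accumulating float error.
    while k * stride + length <= duration:
        windows.append((k * stride, k * stride + length))
        k += 1
    return windows


def windows_containing(event: tuple[float, float],
                       windows: list[tuple[float, float]]) -> list[tuple[float, float]]:
    """Return the windows that contain the whole event [event_start, event_end]."""
    event_start, event_end = event
    return [(s, e) for s, e in windows if s <= event_start and event_end <= e]


windows = sliding_windows(duration=25, length=10, stride=3)
print(windows)                               # [(0, 10), (3, 13), (6, 16), (9, 19), (12, 22), (15, 25)]
print(windows_containing((4, 14), windows))  # [] : a 10 s event fits in no window
print(windows_containing((4, 11), windows))  # [(3, 13)] : a 7 s event fits
```

Choosing a smaller stride relaxes the `length - stride` bound at the cost of more sequences to index and search.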

2. Define the Key Frames & Object

Once the sequence length and stride are set, the next step is to pick a query sequence that contains the event and identify its key frames: the frames that best represent the event. The user then marks the object of interest within these key frames. Data Explorer uses this information to search the dataset for similar sequences, focusing on the specified object.
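This post does not describe how Data Explorer matches sequences internally. As one illustrative technique, the sketch below assumes that each marked object has already been turned into an embedding vector by some image encoder (not shown), and that for every candidate sequence we have object embeddings at the same relative key-frame positions. Candidates are then ranked by mean cosine similarity over the aligned key frames. All names and the toy vectors are hypothetical.

```python
import math

Vector = list[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def sequence_score(query: list[Vector], candidate: list[Vector]) -> float:
    """Mean similarity over key frames compared in order (1st with 1st, 2nd with 2nd, ...)."""
    if len(query) != len(candidate):
        raise ValueError("query and candidate must have the same number of key frames")
    return sum(cosine(q, c) for q, c in zip(query, candidate)) / len(query)


def rank_sequences(query: list[Vector], candidates: dict[str, list[Vector]],
                   top_k: int = 5) -> list[tuple[str, float]]:
    """Return the top_k candidate sequence IDs with their scores, best first."""
    scored = [(seq_id, sequence_score(query, emb)) for seq_id, emb in candidates.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Toy 2-D embeddings for three key frames per sequence.
query = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
candidates = {
    "clip_a": [[0.9, 0.1], [0.1, 0.9], [1.0, 1.0]],   # same motion as the query
    "clip_b": [[1.0, 1.0], [0.0, 1.0], [1.0, 0.0]],   # same frames, reversed order
    "clip_c": [[0.0, 1.0], [1.0, 0.0], [1.0, -1.0]],  # unrelated
}
for seq_id, score in rank_sequences(query, candidates):
    print(f"{seq_id}: {score:.3f}")
```

Because key frames are compared in order, `clip_b`, which shows the same appearances in reverse order, ranks below `clip_a`; this is one simple way to reflect the temporal nature of an event.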

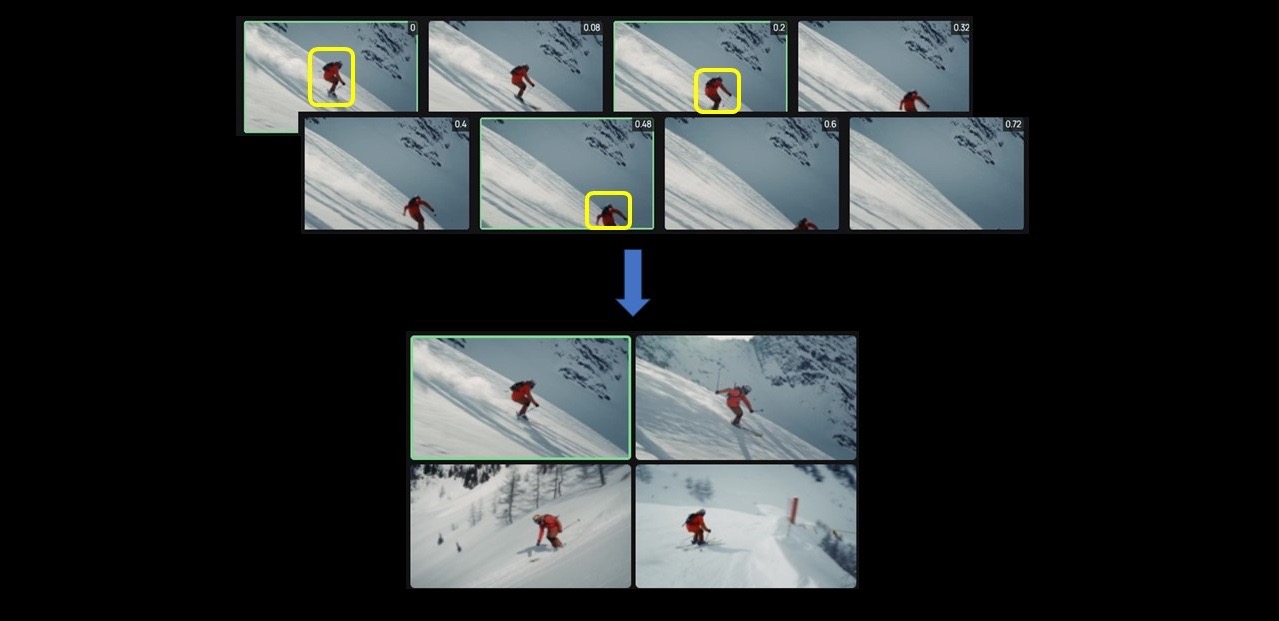

The illustration below shows the process:

- Selecting Key Frames: Choose a few key frames from the full sequence, such as frames 1, 3, and 6.

- Marking the Object: In the selected key frames, mark the object (e.g., a person) that you want to track in the event.

Selecting Key Frames from the full sequence — frames 1, 3, 6 are highlighted in green

Marking the Person as the object in the selected Key Frames
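The query built in this step boils down to a small data structure: the key-frame indices and a box around the object in each one. The sketch below is one hypothetical way to represent it. It uses 1-based frame indices to match frames 1, 3, and 6 in the illustration and pixel boxes given as top-left corner plus width and height; neither convention is taken from Data Explorer, and the box coordinates are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Object box in pixels: top-left corner (x, y), width w, height h."""
    x: int
    y: int
    w: int
    h: int


@dataclass
class EventQuery:
    """Key frames of a query sequence and the object marked in each one."""
    num_frames: int        # number of frames in the query sequence
    boxes: dict[int, Box]  # 1-based key-frame index -> marked object

    def __post_init__(self) -> None:
        # Reject key frames outside the sequence and empty boxes.
        for idx, box in self.boxes.items():
            if not 1 <= idx <= self.num_frames:
                raise ValueError(f"key frame {idx} is outside the sequence")
            if box.w <= 0 or box.h <= 0:
                raise ValueError(f"empty box on key frame {idx}")

    @property
    def key_frames(self) -> list[int]:
        """Key-frame indices in temporal order."""
        return sorted(self.boxes)


def crop(frame: list[list[int]], box: Box) -> list[list[int]]:
    """Cut the marked region out of a frame stored as a list of pixel rows."""
    return [row[box.x:box.x + box.w] for row in frame[box.y:box.y + box.h]]


# Frames 1, 3 and 6 of a six-frame sequence, with the person marked in each.
query = EventQuery(
    num_frames=6,
    boxes={1: Box(40, 60, 30, 80), 3: Box(90, 58, 30, 82), 6: Box(150, 55, 32, 85)},
)
print(query.key_frames)  # [1, 3, 6]
```

Returning `key_frames` in sorted order preserves the temporal order of the event, which matters whenever the query is compared with other sequences frame by frame.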

3. View and Analyze the Search Results

After the search is complete, Data Explorer presents the results, showcasing video clips of similar events. Each image in the result set represents a video clip, with the query event highlighted in green. This visual representation allows users to quickly and easily review the relevant events.

Search results for the chosen event: each image represents a video clip. The query event is at the top left, highlighted in green.

Summary: Unlocking New Possibilities with Event Search in Videos

In this post, we explored how Akridata’s Data Explorer extends its text and visual search capabilities with event search in videos. This feature enables a new set of video processing capabilities and applications across industries such as surveillance, sports, and film.

By making event search more accessible and efficient, Data Explorer paves the way for more sophisticated and accurate video analysis, reducing costs and development time.

For a demo of Data Explorer, or to learn more about how it can help with your video analysis projects, visit us at akridata.ai or register for a free account today.
