Medical devices must meet the highest quality standards to address patient safety concerns. This means devices must be effective, be free of defects, and minimize patient harm. For manufacturers, medical devices must also be consistent in performance and manufacturing while complying with current regulations. With AI-driven automated inspection tools, all of this becomes possible, thanks to […]

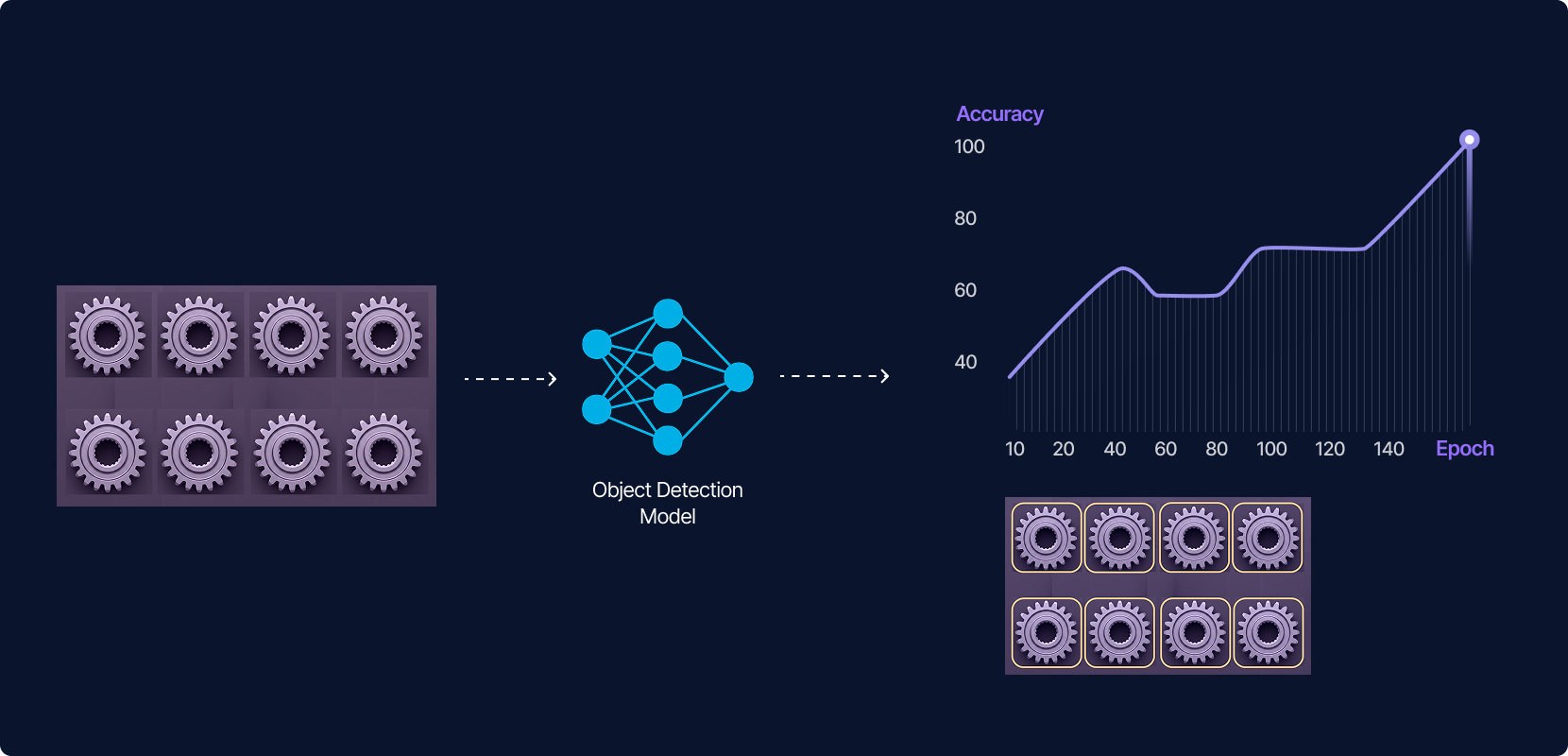

Object detection has become a cornerstone of computer vision, enabling machines to identify not only what’s in an image but also where it is. From autonomous driving to manufacturing quality control, object detection is transforming industries. However, training robust object detection models can be a complex and time-consuming task. That’s where Akridata comes in. […]

With long-standing production processes in place and lofty daily throughput requirements, finding the right time to rework and innovate a manufacturing workflow can be a challenge. As a result, manufacturers often hold on to legacy processes for far too long: processes that throttle production capacity and generate excessive operational costs. A common […]

With the recent major advancements in AI and machine vision technologies, the appeal of using high-accuracy automated inspection systems to improve manufacturing quality control is clear. But are the up-front implementation costs worth it? When evaluating whether AI inspection technology is right for your production process, it’s important to take a holistic […]

Manufacturers of medical devices and critical components consistently struggle with quality control. An over-reliance on manual inspection, and the inherent human error that comes with it, regularly leads to financial and environmental waste, as well as potential harm to consumers and to the manufacturer’s brand reputation. Today, however, automated inspection systems […]

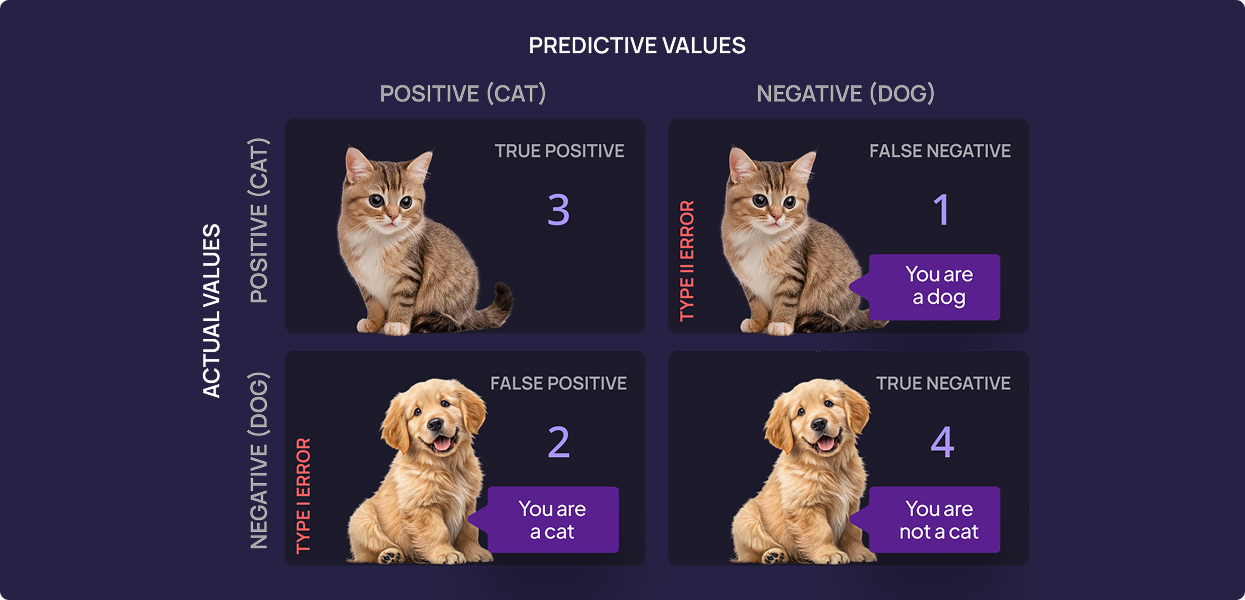

Image classification is a fundamental task in computer vision with applications in medical imaging, autonomous driving, security, and e-commerce. As deep learning models advance, evaluating them correctly ensures reliability, minimizes errors, and enhances real-world performance. Many data scientists rely on accuracy as the primary evaluation metric. However, accuracy can be misleading, especially when dealing […]

The digital age thrives on visual content, from social media graphics to blockbuster movie visuals. Visual storytelling has become indispensable for brands, artists, and creators aiming to captivate their audiences. Enter artificial intelligence (AI), a game-changer in visual content creation. AI tools are enabling creators to produce high-quality visuals faster and more efficiently, democratizing design […]

In an era of rapidly advancing technology, understanding the nuances between different methodologies is crucial for professionals and enthusiasts alike. Two terms that are often used interchangeably but are distinct in their applications are computer vision and image processing. At Akridata, where we leverage deep learning for advanced image inspections in product manufacturing and asset […]

In recent years, the integration of machine vision systems in robotics has revolutionized industrial processes, automating tasks that once required human intervention. From manufacturing to asset monitoring, these systems have expanded the possibilities for robots, making them more efficient, precise, and capable. In this article, we’ll explore the fundamentals of machine vision in robotics, its […]

Deep learning, a subset of machine learning, has revolutionized industries ranging from healthcare to manufacturing. At the heart of this transformation lie optimizers, key components that fine-tune deep learning models for superior performance. In this guide, we’ll explore what optimizers are, their significance, types, and how they influence the development of high-performing computer vision […]